Leaders Make the Future, Third Edition

Ten New Skills to Humanize Leadership with Generative AI

Bob Johansen (Author) | Jeremy Kirshbaum (Author) | Gabe Cervantes (Author)

Publication date: 03/04/2025

Over the next decade, all leaders will be augmented with some form of generative artificial intelligence, or GenAI. For the best leaders, this will mean dramatic improvement. For mediocre leaders, this will mean persistent confusion, distraction, and pretense. With futureback thinking—looking ten years ahead, then planning backward from future to next to now—this third edition of Leaders Make the Future shows how people can improve their leadership skills while expanding their human perspective.

Now 75 percent revised and expanded with resources from the Institute for the Future, this new edition is organized around ten future leadership skills:

Augmented futureback curiosity

Augmented clarity

Augmented dilemma flipping

Augmented bio-engaging

Augmented immersive learning

Augmented depolarizing

Augmented commons creating

Augmented smart mob swarming

Augmented strength with humility

Human calming

AI-augmented leadership will be key for any organization to tackle the uncertainty of the future. And by incorporating practical methodologies, ethical guidelines, and innovative leadership practices, this book will help leaders develop their clarity and moderate their certainty.

1 Generative AI Will Be a Leadership Gamechanger

Generative AI is a social collaboration medium that uses digital tools to craft new language, media, images, code, and music from existing patterns.

GenAI is brewing a new kind of digital alchemy that will stretch both leaders and leadership.

GENAI HAS BEEN a very long time coming. The term “artificial intelligence” (AI) was coined in 1956.

GenAI is still emerging, and we still don’t have good language to describe the phenomenon that will disrupt leaders and leadership over the next decade. To understand the direction of change, you need to think futureback.

This is a time for thinking futureback with curiosity and humility. Who would have thought that humans would have such a weird and superpowerful digital medium that is good at poetry and bad at math?

Many forms of machine learning and AI have been around for years, and some have become commonplace within organizations. Many leaders use some form of AI already, sometimes without even being aware they are using AI. Machine learning or AI for fraud detection, shopping recommendations, transit route planning, and a hundred other things are so commonplace as to be nearly invisible. Generative AI is different and new, however, with profound implications for leaders.

How Will GenAI Augment Leaders?

Douglas C. Engelbart was one of the pioneers of the computing movement writ large, inventing the mouse, the graphical user interface, collaborative software, and a whole host of other innovations we take for granted today—and some that have yet to become practical. He was driven by a deep desire to improve the capacity of people to learn and create. The vision he laid out in his 1962 essay “Augmenting Human Intellect: A Conceptual Framework” still rings true for us:

We refer to a way of life in an integrated domain where hunches, cut-and-try, intangibles, and the human “feel for a situation” usefully co-exist with powerful concepts, streamlined terminology and notation, sophisticated methods, and high-powered electronic aids.1

Being augmented means the ability to extend one’s capabilities and intelligence while being even more human—and in ways that will have a dramatic impact on the daily lives of people in all walks of life.

Engelbart was a neighbor of the Institute for the Future (IFTF) for years, and we had many exchanges with him and his team from the SRI Augmentation Research Center, which also served as the first library for the ARPAnet. Bob remembers talking about “nonhuman participants in team meetings” in the 1980s, and these ideas were introduced in his book Teleconferencing and Beyond: Communications in the Office of the Future.2 In 1988, Bob wrote Groupware: Computer Support for Business Teams that included a scenario called “Nonhuman Participants (Support for Electronic Meetings).”3 Groupware was dedicated to Doug Engelbart and Jacques Vallée, who departed Engelbart’s group to lead groupware development at IFTF.

Now, many years later, this foresight is finally becoming scalable. A new wave of change is swelling with generative AI and large language models. GenAI will stretch the abilities of leaders in new and necessary ways. A key benefit of GenAI for leaders is that it stretches their thinking. However, stretching can be both good and bad for leadership.

GenAI is fundamentally about creating new things and new data. It can involve search, analysis, or summarization, but in a way that produces new data that never existed before—where something new is produced that will result in a story, an action, a program, a warning.

As futurists, we have learned that if you get your language right, it will draw you toward the future. If you get your language wrong, you will fight the future. In our fifty-plus years of studying foresight, “artificial intelligence” is the worst term we have encountered to describe an emerging technology.

Artificial intelligence implies replacing humans with computers, which may happen in specific ways. This framing of AI starts a sidetrack conversation that kindles more fear than hope. The big story, though, as Thomas Malone at MIT argues,4 will be computers and humans working together to do things that have never been done before. Malone uses the term “superminds” to describe humans augmented by AI, which we find to be a much more hopeful framing.

“Augmented intelligence” is a more accurate term than AI, as is “generative intelligence.” But we still have a language gap.

How to Describe the Emerging Generative AI Phenomenon

Benjamin Bratton is directing a new research program on the philosophy of computation. He and Blaise Agüera y Arcas write about the challenge of language to describe what is possible now and, more importantly, what will become possible:

AI as it exists now is not what it was predicted to be. It is not hyperrational and orderly; it is messy and fuzzy. … Perhaps the real lesson for philosophy of AI is that reality has outpaced the available language to parse what is already at hand. A more precise vocabulary is essential.5

Instead of a precise vocabulary, we are left to ponder the off-putting term “artificial intelligence.”

Jaron Lanier, one of the pioneers in this area (whatever we choose to call it), gives us his advice:

As a computer scientist, I don’t like the term “A.I.” In fact, I think it is misleading—maybe even a little dangerous. Everybody’s already using the term, and it might seem a little late in the day to be arguing about it. But we’re at the beginning of a new technological era—and the easiest way to mismanage a technology is to misunderstand it.6

Lanier suggests calling this emerging tool set “an innovative form of social collaboration.”7

Large language models will disrupt leadership, but it is not obvious—even to their developers—how they work and what to call what they do. What is clear is that they are unprecedented and will reshape the very nature of leadership.

In this book, we will try out new ways of describing these mysterious technologically enabled phenomena. Our goal is to develop a roadmap for augmented leadership, thinking ten years ahead, plus a step-by-step pathway for leaders to augment themselves. We prefer the term “augmented intelligence” to artificial intelligence.

Despite its inadequacies, the term AI is commonplace and therefore an effective and clear shorthand for what we are talking about. We went back and forth on whether to try to coin new descriptions for “AI,” “thinking,” “reasoning,” “knowledge,” and all the similar terms that don’t quite fit with what is happening on our computers but look a lot like it. Ultimately, we thought this would be distracting to the reader. So, despite the term’s weaknesses, when it is the best term to convey what we mean, we will go ahead and say “AI.”

Early Flight and AI—A Metaphor

In many ways, early flight is an interesting metaphor for this phase of generative AI and large language models. Right now, we can see that generative models are doing interesting, sometimes even useful, things. Some of the things they do look a lot like what we currently call “intelligence” and “understanding” when they occur in a human or animal. However, no one, even the people making the models, has a strong theoretical explanation for why they are doing this. It was the same when powered human flight began. This might sound strange, but the concepts we use to explain how flight works, like the idea of “lift,” were developed decades after the Wright brothers’ first successful powered flight. Amazingly, understanding why something works isn’t a prerequisite to inventing it. This happens more often with new technologies than you might assume. If you want more detail on the timeline of human flight’s invention and the advancement of its undergirding theory, some academic sources have been provided in the footnotes.8

Jeremy thinks this era of early flight is a useful allegory for where we are today with generative AI. Because we built generative neural networks, we have a detailed understanding of how they work, but we don’t have any good explanations for why. Luckily, this isn’t a permanent feature of generative models. Scientific breakthroughs are already being made that are leading us toward a deeper understanding of generative models’ behavior.

The invention of powered human flight is also an interesting grounding when we think about the difference between the historic myth of flight and its reality. The Wright brothers were not at all the first to imagine humans flying. The myth and aspiration for it had existed for centuries. In most of these imaginings, human flight looked like other kinds of flight that existed—like how a bird or insect flies—with flapping wings and feathers. This wasn’t, ultimately, what ended up working best for transporting humans through the air. At least, not exactly. Airplanes do have wings like birds, but they don’t flap or have feathers.

Something similar is happening with generative AI models. Myths and stories of intelligence emerging from inanimate objects have existed for centuries. Stories of human-like intelligence in machines have existed for decades. But just as progress in flight did not mean that airplanes became more like birds, progress in AI will not mean that AI models will become more like people. Today’s AIs, including generative models, do not think like humans do. Asking when AI is going to think like a human is like asking when planes are going to fly like birds. The answer is: probably never. Why would they?

Even as we’re trying to work out these simple baselines, our language is already getting in the way. Powered human flight is different from flight in the natural world, but we decided to just proceed with using the same word for it. We need more words to talk about what it is that generative AI is actually doing. A lot of what generative AI does certainly looks like “thinking” and “understanding” or “reasoning.” We know that what is happening inside the large language models does not look like what happens inside the human brain. Many people point this out to demonstrate that the models do not “understand” or “think.” We agree to some extent, but this makes it difficult to talk about what they actually are doing. If we want to say that an airplane isn’t really “flying” because it’s not flapping its wings, that is true in a way. But how do we talk about the fact that an airplane is up in the air and moving?

This created a dilemma for us as authors. Do we create new words for what AI is doing? For instance, do we define “learning” as something only a human does, and when a generative model takes in information and forms patterns from it, we make up a word to describe it? This might make our language more precise, but it might also bog down the reader with new definitions and jargon. Or do we use the terms that we already use for humans, and risk the misleading anthropomorphization that might result? There isn’t a perfect solution to this—it is a dilemma, not a problem. For this book, we found trying to invent new words for generative models’ behavior too distracting from our message. We’ve decided to continue to use anthropomorphic words like “learning,” “understanding,” and “reasoning” to describe the behavior of AI, and we trust the reader to know that we do not mean it is performing these things the way a human would.

Augmenting Leadership with GenAI

The best future leaders will be extended and enhanced in digitally savvy but humane ways. The already-simmering question is how leaders will want to be augmented to thrive in the future. This third edition of Leaders Make the Future tells a provocative futureback story from ten years ahead to help leaders develop their own clarity and commitment in the present. Foresight ignites curiosity. What leadership mindset, disciplines, and skills will need to be augmented in which ways?

How can human leaders evolve into cyborgs with soul?

The goal of augmented leadership is to develop and refine your clarity—while moderating your certainty. For leaders, augmented intelligence can be a “thought partner,” a “foresight generator,” or a “boundaries pusher.” But it is better for exploring options than providing answers. This is the essence of strategic foresight: exploring questions that we have yet to ask instead of always assuming that there is a single correct answer.

This book will equip leaders with actionable knowledge and skills for implementing AI in their own leadership. It will foster a paradigm shift toward accepting and utilizing AI as an essential leadership tool. It will promote ethical, empathetic, and sustainable leadership in the emerging AI-augmented digital age.

Narrow forms of machine learning can be thought of in many ways as variations on data analysis and statistical methods. Their job is to analyze data and predict something based on that data.

Generative AI is very different. Although we may leverage some similar methods to narrow machine learning when creating generative models, the goals, function, and purpose are different.

With generative AI, the goal is to create new things. New text, images, videos, audio—even new actions. Its goal is not to predict (just as the goal of foresight is not to predict) but to expand, expound, elaborate, and explore—all critical tasks for senior leadership.

GenAI and Top Leadership

Because of these capabilities, generative AI will disrupt senior leadership in profound ways. The job of a senior leader is difficult to define. If a problem or dilemma is not messy and intractable, it will rarely filter up to a senior leader. Being an effective senior leader requires a unique blend of skills, literacies, and mindset. GenAI will disrupt all of these.

This is not to say that senior leaders will be replaced—far from it. But with GenAI, how leaders lead will change dramatically. This comes with enormous opportunities and frightening risks. GenAI could amplify or degrade a leader, depending on how it is used.

For future-focused and effective leaders, GenAI will be able to scale their clarity across their organization in yet-to-be-told ways. But it will be harder than ever for organizations to absorb or prop up mediocre or bad leaders.

If you are a mediocre leader, congratulations! You will have the power to spread your mediocrity across your organization with unprecedented efficiency. This raises the already-high bar for senior leaders even higher. The ten future leadership skills already outline how to be future-ready, and we have added new thoughts on how augmentation will accompany, extend, and sometimes disrupt these skills. We know GenAI will be a giant disruptor for leaders, even though we don’t yet have an accurate language to talk about it.

At some point, everyone will realize the significance of these technological capabilities, provided they are paying attention. For coauthor Jeremy Kirshbaum, it happened to be in 2019. He was leading a small team of innovation consultants and encountered GPT-2 while doing a custom forecast with IFTF. (GPT-2 was the language model from OpenAI that preceded ChatGPT.) At that time, language models were less sophisticated but already capable of creating ideas, simple frameworks, or poems. This was startling to Jeremy, because for the first time, the computer was doing what he did. Coming up with ideas, not just aggregating information, is the core value of innovation consulting. Jeremy saw glimmers of augmentation potential in GPT-2, BERT, and the other language models emerging in 2019. In the sense that his job was to write and come up with ideas, he had the distinct feeling that he was an old farmer digging with a shovel until someone showed him the first tractor.

Jeremy was overwhelmed with this thought: “I need to start learning how to use tractors right now.” He began a headlong dive into learning how to augment himself with GenAI.

Jeremy started working more with GPT-2 and then its descendant, GPT-3, when it came out in private beta in early 2020. He realized, though, that the language model was only as good as the person using it. If someone was good at poetry, they could create beautiful poetry with it. If someone was good at expository writing, they could coax even better writing from it. It was not, however, able to turn a bad poet into a good poet or a bad writer into a good writer.

Extending the farming metaphor—if you don’t know how to farm, you can sit in a tractor all day and the crops are not going to grow. If an expert farmer is driving the tractor, then it is a powerful augmentation.

This will be the case for generative AI for the foreseeable future. The limiting factor of GenAI for leaders will be their own ability to understand and know what “good” looks like. It will be a long time before any digital system can lead for us, far more than a decade from now—if then. Right now, AI cannot be trusted any more than the person using it. Leaders will be augmented, but only rarely will they be automated.

Today, some people use AI-powered grammar checkers and generally trust them more than their own judgment to check grammar. Generative AI in the next decade will become reliably better than humans at tasks far more complex than grammar. Not so with the job of a senior leader. If senior leaders find that parts of their job can be automated with AI, then they probably shouldn’t have been in top leadership positions in the first place.

Present-Forward versus Futureback

Leaders must make choices over the next decade to bring about the positive change potential of GenAI, and they must act with intention, discernment, and self-control.

Tables 1 and 2 summarize the shifts in how leaders will perceive and use GenAI over the next decade.

Many of the present-forward views are things that may seem attractive to leaders at first, but each has hidden dangers and unintended consequences. Some of them are important to focus on in the near term, but it is a trap to believe they are the whole story. The futureback view offers a way to think about the same things in a more holistic way that goes beyond the dilemmas of the present.

Here are our definitions for each of the elements of the present-forward and futureback views of generative AI for senior leadership.

TABLE 1. Present-Forward (Current) Priorities for GenAI Compared to a Futureback Forecast of Where GenAI Will Provide Most Value for Leaders

| PRESENT-FORWARD VIEW | FUTUREBACK VIEW |
| --- | --- |
| Efficiency and speed | Effectiveness and calm |
| Prompts and answer-finding | Mind-stretching conversations |
| Automation | Your augmentation |
| Certainty seeking | Your clarity story |
| Personal agents | Human/agent swarms |
| Guardrails needed | Bounce ropes needed |
| Avoiding hallucinations | Meaning-making |
| Increasing secular control | Re-enchanting our world |

TABLE 2. Direction of Change for GenAI and Senior Leadership

| FROM PRESENT-FORWARD TO FUTUREBACK | DIRECTION OF CHANGE |
| --- | --- |
| From efficiency and speed toward effectiveness and calm | While efficiency (doing things right,9 according to management guru Peter Drucker) is attractive, the long-term value of GenAI for leaders will be effectiveness (doing the right things). Meaningful outcomes will be more important than streamlined processes—although it may be possible to do both. Leaders will need to discern which efficiency tools contribute to effectiveness as well. Leaders will need to resist the temptation of taking shortcuts that sacrifice long-term effectiveness for short-term gains. The early applications of GenAI have focused on efficiency and getting work done more quickly: make things work faster with fewer mistakes. Offload the busywork that humans don’t want to do. Replace humans when possible. Doing the same things faster is just the beginning. Over the next decade, machine/human teams will figure out ways to do better things better. |
| From prompts and answer-finding toward mind-stretching conversations | GenAI will be so much more than a question-and-answer machine for information retrieval. The exchange between a human and GenAI should be more like a conversation—not just a series of human prompts followed by computer answers. As in human conversations, leaders will get the most value from deep dynamic interactions with GenAI over time. GenAI’s response to a single prompt is rarely very interesting. Discerning leaders will prompt deeper conversations and engagements that are much more likely to yield value, but these conversations could go on for a long time. By “conversation,” we don’t just mean words—far from it. Even in a conversation between two humans, much more than just words is exchanged. Over the next decade, conversations between humans and GenAI systems might involve iteratively creating applications, systems, videos, or virtual worlds. By conversation we mean they will be iterative, durational, and evolve over time. |
| From automation toward your augmentation | The urge to automate is tempting, and some processes do lend themselves to automation. On the other hand, overly relying on automation is a danger that will increase with GenAI. Automating processes that should not have been done in the first place will be counterproductive, annoying, and perhaps even dangerous. Automation is the use of technology to perform tasks with little or no human intervention. Augmentation, on the other hand, begins with human abilities and asks how they could be amplified or extended with technology. The goal of automation is to reduce human involvement. The goal of augmentation is to enhance human involvement. Our conclusion in this book is that thinking futureback, all leaders will be augmented—but few leadership roles will be automated. |
| From certainty seeking toward your clarity story | The best leaders will develop their clarity but moderate their certainty. In a BANI future that is brittle, anxious, nonlinear, and incomprehensible, certainty will be both impossible and dangerous if attempted. For some people, the chaotic uncertainties of the BANI future will be too much to handle. There will always be extreme politicians, religious leaders, and other simplistic thinkers who say they can offer certainty in an increasingly uncertain world. Unfortunately, there will also be people who believe them. In a BANI future, leaders cannot be certain, but they must have clarity. In fact, leaders will need their own personal clarity story in order to thrive. |
| From personal agents toward human/agent swarms | GenAI agents bridge ideas to action. Over the next decade, individual agents will be organized into agents for collective actions. These human-computer partnerships will create new kinds of collaborative intelligence, leveraging both human and machine capabilities. As leaders learn how to use their own agents, it will profoundly affect how decisions are made, how teams collaborate, and how strategies are developed. As individual agents become part of orchestrated swarms of humans and agents, things will get quite complicated. When it all works well, expect enhanced collaboration and increased resilience. Complex scenario simulations will become possible, along with innovative solutions. The complexity and distribution of power will also increase the risk of governance issues and ethical challenges. The power of individual contributors in an organization will increase dramatically as they will have at their disposal complex agent systems to aid in their work. But when things go wrong, liability will be complicated. |
| From guardrails toward bounce ropes | Bounce ropes encircle wrestling rings to keep the wrestlers safe in a strong yet flexible way. It is even possible for a wrestler to bounce off the rope as it flexes back. For a technology as emergent as GenAI, bounce ropes are a much more appropriate metaphor than guardrails. Some constraints will be desirable, but they will have to be flexible as the reality of GenAI comes to life. Calls for public policymakers to create guardrails for GenAI are understandable but naive. Not even GenAI developers understand exactly what is going on within these large language models—let alone the implications of large-scale use. Policymakers and elected officials are likely to be uninformed or misinformed about these emerging tools and media, even if they are well-intentioned. We expect many unintended consequences from even the most prescient policies. Unexpected and often inappropriate actors are likely to step into any vacuum. |
| From hallucination toward meaning-making | A GenAI hallucination may contribute to a human’s creativity. In the early days of GenAI, people were disturbed if an AI “made up” an answer—especially if the system stated it confidently without any hint that it might be incorrect. As conversations between GenAI and humans go deeper, however, such out-of-the-box thinking will become part of a human-driven but GenAI-fueled process of creative meaning-making. What we call “hallucinations” are a feature of GenAI systems, not a bug. At Institute for the Future, we hire people with very strong academic backgrounds, but we want people who fail gracefully at the edge of their expertise. As futurists, we are often at the edge of our expertise. How do you fail gracefully? First, you acknowledge that you are at the edge of your knowledge. Then, you do things like asking questions, drawing analogies that might help explain where you are, or perhaps using models or orienting yourself to the unknowns around you. The danger is pretending you know something when you do not. Strong opinions, strongly held, will be dangerous. Similar danger will arise with GenAI. Fabrications can be very useful if they are labeled as such and used as part of an exploratory exercise like scenario planning. Most of today’s GenAI systems do not yet fail gracefully. |
| From increasing secular control toward re-enchanting our world | The urge to control is tempting, but the ability to control is limited. GenAI has come of age in an increasingly secular world. On the surface, GenAI seems to be a secular technology. But what if GenAI can help us explore the mysteries of life—as well as the certainties? What if GenAI can help us explore the invisible—as well as the visible? Most of today’s world seems disenchanted and increasingly secular. How might GenAI help to re-enchant our world? MIT computer scientist David Rose coined the term “enchanted objects” to describe how AI will allow ordinary objects to do extraordinary things—like an umbrella that lights up when rain is forecasted or a pill bottle that reminds you to take your pill.10 As Arthur C. Clarke said: “Any sufficiently advanced technology is indistinguishable from magic.”11 |

GenAI Agents Will Come Next

In the next decade, AI agents will become more and more reliable as systems for accomplishing tasks in the digital world. When we say “agent,” we mean a system that takes actions in the digital world to accomplish an objective without the steps to complete that task being deterministically predefined. An agent is an AI that makes decisions and takes actions. Such tasks could be more automated and may or may not require human intervention.

Within the next few years, agents will become reliable and useful for completing tasks. For example, leaders could speak or write, “Copy all of these emails from this document into a spreadsheet, draft an email to each of these people inviting them to an event next week, and send the email.” This ability will lead to broader tasks with greater analytical depth: “Go through all of my documents from the last five years and look at which of them resulted in greater client success.” Some common versions of these kinds of agents will already be gaining popularity by the time this book is published.

In the next decade, AI agents are going to routinely and automatically perform domains of tasks that make up people’s entire jobs. The economic impacts of agents, positive and negative, will far exceed that of chat-based systems.

It is less obvious how far robotics will advance in the next decade. Perhaps unexpectedly, it is much harder to use computers to do “simple” things in physical space—like flipping burgers or taking loose items out of a bin and placing them on an assembly line—than it is for them to do complex tasks in the digital world. This is sometimes referred to as “Moravec’s paradox.”12 The concept was articulated by Hans Moravec, Rodney Brooks, Marvin Minsky, and others in the 1980s, when they observed that tasks involving reasoning require relatively little computational power compared to sensorimotor skills and perception, which demand extensive computational resources.

AI agents will be a transformative shift in how we interact with digital spaces and perform tasks both mundane and complex. An agent is a system that can carry out a series of actions on a computer, including in a browser or on a desktop, in such a way that the user declares what they want but not entirely how they want it. Unlike generative models and systems focused on singular tasks, these agents can execute multiple steps without constant human oversight. An agent can make some kinds of “decisions” on its own about the process. Robotic process automation (RPA) tools like Zapier or UiPath, or other traditional automation tools, don’t fall into this category for us. The human user might still be involved at critical junctures, such as reviewing a tweet or a draft composed by the agent, but the user would not be consulted for every micro-decision, like opening an application or positioning a cursor. Soon, in many cases, the user will not be consulted at all.
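For readers who think in code, the agent pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any real product: the names `Tool`, `Agent`, `toy_planner`, and `build_demo_agent` are hypothetical, and the planner is a hard-coded rule set standing in for the generative model that would choose the next step in a real system. What the sketch shows is the shape of the loop: the user states a goal, the agent picks its own steps, and a human is consulted only for actions marked as critical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Tool:
    name: str
    run: Callable[[dict], None]  # performs an action by updating shared state
    critical: bool = False       # True means a human reviews before it runs


@dataclass
class Agent:
    tools: dict
    choose_next: Callable[[str, dict], Optional[str]]  # planner (assumed stand-in for a model)
    approve: Callable[[str, dict], bool]               # human checkpoint
    log: list = field(default_factory=list)

    def pursue(self, goal: str, state: dict, max_steps: int = 10) -> dict:
        """Work toward the goal; the step order is chosen at run time."""
        for _ in range(max_steps):
            name = self.choose_next(goal, state)
            if name is None:  # planner considers the goal met
                break
            tool = self.tools[name]
            # Micro-decisions run unattended; critical ones wait for a person.
            if tool.critical and not self.approve(name, state):
                self.log.append(f"blocked:{name}")
                break
            tool.run(state)
            self.log.append(f"ran:{name}")
        return state


def toy_planner(goal: str, state: dict) -> Optional[str]:
    """Hypothetical rule-based planner; a real agent would ask a model."""
    if "emails" not in state:
        return "extract_emails"
    if "draft" not in state:
        return "draft_invite"
    if "sent" not in state:
        return "send_invite"
    return None


def extract_emails(state: dict) -> None:
    state["emails"] = [w for w in state["document"].split() if "@" in w]


def draft_invite(state: dict) -> None:
    state["draft"] = f"You are invited to {state['event']}."


def send_invite(state: dict) -> None:
    # Stand-in for a real send; it only records the recipients.
    state["sent"] = list(state["emails"])


def build_demo_agent(approve: Callable[[str, dict], bool]) -> Agent:
    tools = {
        "extract_emails": Tool("extract_emails", extract_emails),
        "draft_invite": Tool("draft_invite", draft_invite),
        "send_invite": Tool("send_invite", send_invite, critical=True),
    }
    return Agent(tools=tools, choose_next=toy_planner, approve=approve)
```

Calling `build_demo_agent(approve=lambda name, state: True).pursue("invite the team", {"document": "ana@example.com and bo@example.com", "event": "the offsite"})` runs all three steps without further input. Passing an `approve` function that returns `False` stops the agent just before sending, which is one simple way to picture a bounce rope at a critical juncture.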

We anticipate that the use of agents based on generative models will be entering the mainstream within the next decade, perhaps by the time this book is available on shelves. These agents will have the capability to perform tasks by executing a series of actions, with humans only intervening at specific points. This marks a departure from simpler interactions where a model executes a single task or generates some content and then awaits further instructions.

Swarms of Agents and Humans

The direction of change is toward comprehensive systems that incorporate generative models but also encompass various non-AI components. Such systems operate in the physical world or in digital realms by leveraging APIs and tools to execute tasks on behalf of a user, and they are authorized to act in such a way by the user. Their applications are vast. In 2024, agents are already able to post on social media, purchase flights, and send emails, essentially “moving around” the internet or a computer to accomplish these tasks. In the next decade, they will simultaneously carry out series of complex goals and intents that today make up entire human jobs or professions.

“Agent” is a good term because it gets across the fact that these systems are performing actions in a digital environment, as distinguished from simple image generator models or language models that produce something only at a direct request and with a single iteration before returning to the user for additional input. The word “agent” might be misleading, however, in that it gives some impression of consciousness or intelligence or inherent motivation, which immediately sparks concerns: “What if it gets out of control? What if it decides to have a mind of its own?”

Agents will not have a mind of their own any more than ChatGPT does. However, the question of how we manage them is more urgent because they will have direct access to many of the apps on our computers and to powerful tools like credit cards. Ensuring appropriate bounce ropes will be important.

The sophistication of such agents is rapidly growing, which suggests a significant shift in how software will be perceived and used. Agents will be capable of understanding instructions and executing numerous steps without direct human intervention. This evolution will fundamentally alter work dynamics, enabling the outsourcing of not only routine tasks but also complex endeavors like research, analysis, and strategic planning.

In the context of business organizations, the integration of agents introduces a new paradigm of operation. Leaders will not only manage human teams but also coordinate agents, necessitating a clarity in communication that bridges both human and digital execution. This raises important considerations around the interaction of agents, the potential complexities therein, and the crucial balance of human intervention.

Agents will stretch organizational agility. Agents will communicate context efficiently. A fluid hierarchy, complemented by a strategic deployment of agents, can augment decision-making and problem-solving processes, empowering individuals closer to issues while allowing senior leaders to concentrate on overarching strategic dilemmas. While agents will be great at solving well-defined problems (even complex ones), leaders will still be needed to handle dilemmas without clear solutions or success criteria. The human aspects of leadership around managing uncertainty will remain essential. Agents will be better suited to routine problem-solving than the leadership skill of dilemma flipping.

The advancement of agents also brings to the foreground concerns around control, especially given their direct interface with applications and potentially sensitive information, such as credit card details. Establishing secure and effective workflows is paramount to ensure that the benefits of agents are harnessed without undue risk. In the next decade, however, the greatest risks and benefits of agents will be less obvious than simple password management. Many businesses will overreact by being hyper-conservative, banning agent-based work and missing out, while others will make colossal mistakes by deploying agents freely on the internet with inadequate oversight and predictably embarrassing, or even dangerous, results.

Considering how work can and should look in a world of agents is not merely about task automation but a reimagining of the interaction paradigms between humans and technology. As we contemplate the future, understanding the role of agents, their potential, and the broader implications for leadership and organizational structures becomes essential. The integration of agents points toward a future where the delineation between software and agent becomes blurred, transforming the way that tasks are undertaken and redefining the potential of digital and workplace environments.

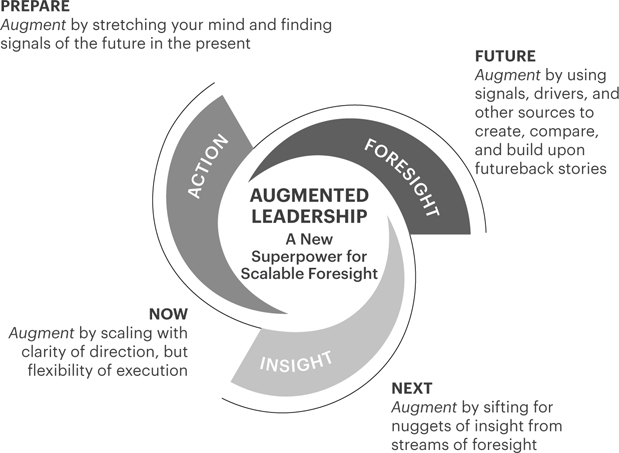

Augmented Leadership

Our belief is that top leadership is like navigating a wild river’s tumultuous waters. We view change as a complex, fluid process—unpredictable at times yet navigable with foresight, insight, and agile action. We suggest that leaders embrace futureback thinking—looking ten years ahead, then planning backward from the future to the next step to the present moment (the now).

This approach requires understanding deep trends (patterns of change that can be learned from with confidence) that will shape the future—like currents in the river. In this book, we are particularly interested in how leaders can navigate in a future that will be laced with disruptions—breaks in the patterns of change, like barely exposed giant rocks in a churning river.

Futureback thinking reveals two underlying patterns of change:

- Drivers of change: the giant waves of change that are bigger than all of us

- Signals of change: the small whirlpool futures that are already spinning but unevenly distributed and not yet at scale

Change isn’t a force to resist but a reality to harness. Foresight can turn looming chaos into opportunities for growth and innovation. Leaders will need to develop their own flexive intent—developing great clarity about direction while maintaining flexibility about execution—to adapt and thrive amid extreme uncertainty. Leaders must build resilience and inspire action around preferred futures while avoiding the fatal falls. The most powerful vision will be scalable foresight that flows from foresight to insight to action, as summarized in figure 2.

FIGURE 2. Summary of How GenAI Will Augment Each Stage in IFTF’s Foresight-Insight-Action Cycle. Source: Institute for the Future.